2x faster than Encore – 1 year later

Author: saltyaom – 14 Nov 2025

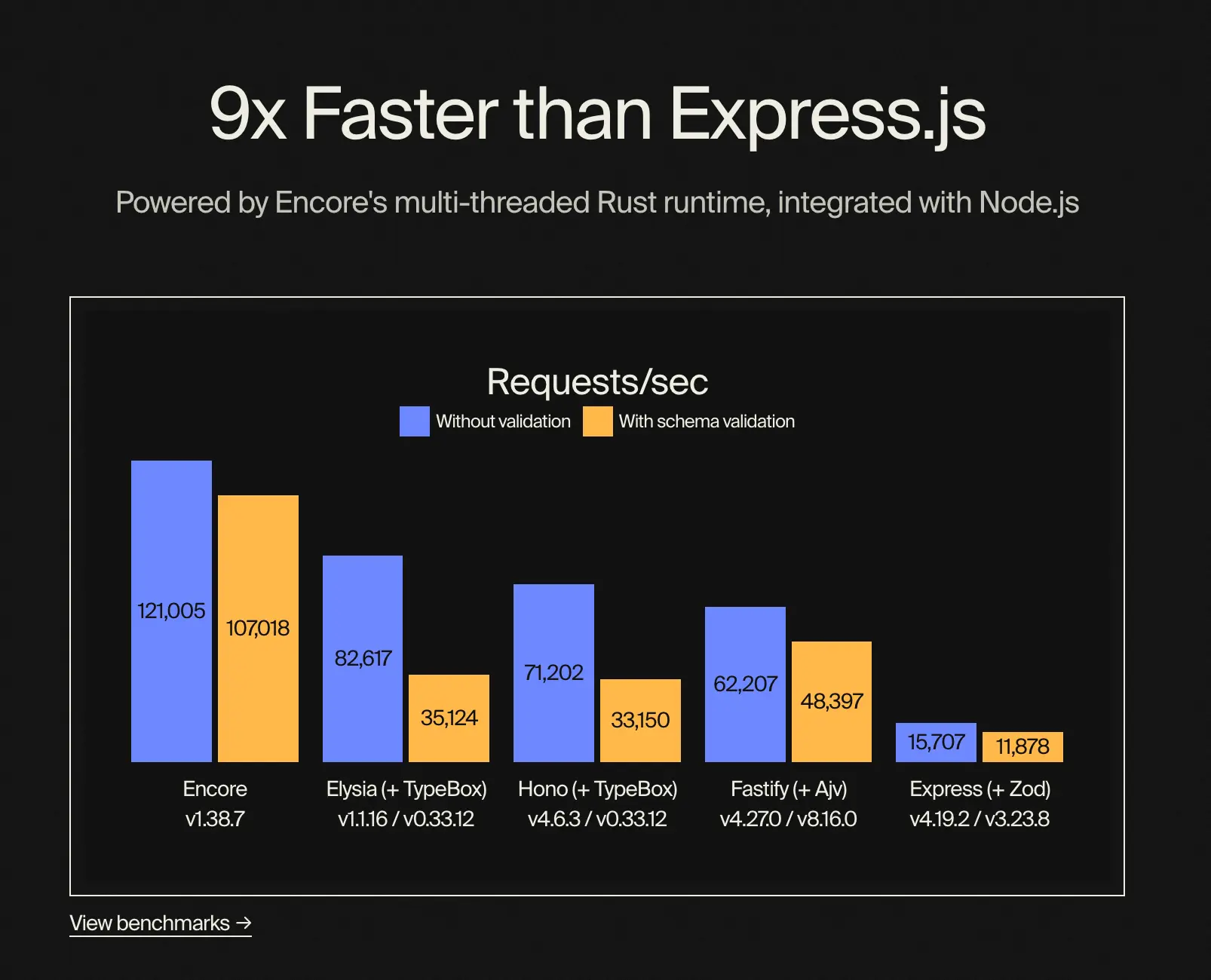

On Jun 17 2024, Encore published a blog post, “9x faster than Express.js, 3x faster than ElysiaJS & Hono”, claiming that Encore was faster than Elysia & Hono by 3×.

Today, after 1.5 years of development, Elysia is now 2× faster than Encore in the same benchmark.

A little introduction

EncoreTS is a framework powered by a multi-threaded Rust runtime with a binding for Node.js.

It leverages Rust’s performance and safety features to build high‑performance web applications.

Encore claims to be faster than Elysia & Hono by utilizing Rust for performance‑critical tasks, while still providing a familiar JavaScript/TypeScript interface for developers.

Benchmark on EncoreTS homepage

1.5 years later, we revisited the benchmark to see how Elysia performs against Encore.

Revisiting the benchmark

The benchmark is publicly available on:

- Encore’s GitHub

- our fork to run the benchmark with the latest version of Elysia.

After some inspection, we noticed that the original benchmark for Elysia was not optimised for production. We made the following changes to ensure a fair comparison:

- Add `bun compile` to the Elysia script to optimise for production

- Update Elysia bare request to use static resources

- Due to machine specification, we updated OHA concurrency from `150` to `450` to scale the upper limit

Lastly, we updated all the necessary dependencies to their latest versions:

| Framework | Version |
|---|---|
| Encore | 1.5.17 |
| Rust | 1.91.1 |
| Elysia | 1.4.16 |
| Bun | 1.3.2 |

Machine specification

- Benchmark date: 14 Nov 2025

- CPU: Intel i7‑13700K

- RAM: DDR5 32 GB 5600 MHz

- OS: Debian 11 (bullseye) on WSL – 5.15.167.4‑microsoft‑standard‑WSL2

Benchmark results

After running the benchmark, we obtained the following results:

Benchmark result: Elysia is 2× faster than Encore

| Framework | Without Validation (req/s) | With Validation (req/s) |
|---|---|---|
| Encore | 139,033 | 95,854 |
| Elysia | 293,991 | 223,924 |

Using the original benchmark, Elysia outperforms Encore in all categories, achieving double the requests per second in all tests.
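As a quick sanity check on the "2×" figure, the ratios can be recomputed from the table. The snippet below is only arithmetic on the published numbers; the variable and function names are ours, chosen for illustration.

```typescript
// Requests per second, copied from the results table above.
const results = {
  encore: { withoutValidation: 139_033, withValidation: 95_854 },
  elysia: { withoutValidation: 293_991, withValidation: 223_924 },
};

// Ratio of Elysia's throughput to Encore's for one scenario.
function speedup(elysia: number, encore: number): number {
  return elysia / encore;
}

const withoutValidation = speedup(
  results.elysia.withoutValidation,
  results.encore.withoutValidation,
);
const withValidation = speedup(
  results.elysia.withValidation,
  results.encore.withValidation,
);

console.log(withoutValidation.toFixed(2)); // 2.11
console.log(withValidation.toFixed(2)); // 2.34
```

Both scenarios land slightly above 2×, with the larger gap in the validation test.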

See the step-by-step video proof of how to run the benchmark.

How did we achieve this?

In the original benchmark, the Elysia version was 1.1.16. Since then, we have made significant improvements to Elysia's performance through various optimisations and enhancements.

To summarise, in a single year, both Elysia and Bun have made significant performance improvements.

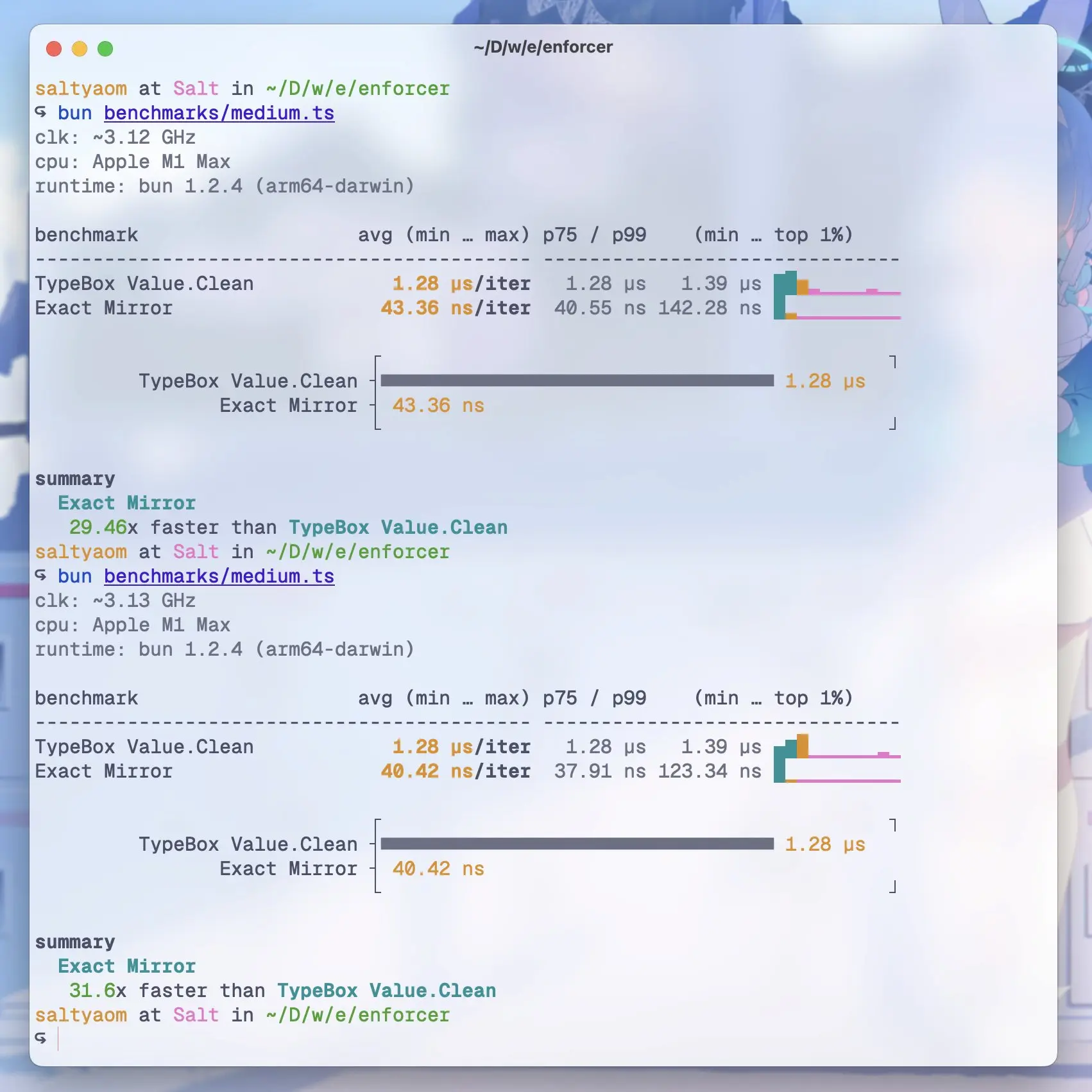

Exact Mirror

In the original Encore benchmark, Elysia performed a data normalisation on every request, which adds significant overhead to the request processing time.

The bottleneck is not the data validation itself, but the normalisation process, which involves dynamic data mutation.

In Elysia 1.3, we introduced Exact Mirror to accelerate data normalisation using JIT compilation instead of dynamic data mutation.

Exact Mirror run on a medium-sized payload, resulting in a 30× speedup

This significantly improves validation performance in Elysia.
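To make the difference concrete, here is a minimal sketch of the general idea: generating a specialised function from a schema once, instead of mutating a copy of the data on every request. This is not Exact Mirror's actual implementation or API. `FlatSchema`, `normaliseDynamic`, and `compileNormaliser` are hypothetical names, and the sketch assumes a flat object schema only.

```typescript
// Hypothetical minimal schema: a flat object whose fields are primitives.
type FlatSchema = Record<string, "string" | "number" | "boolean">;
type Plain = Record<string, unknown>;

// Dynamic approach: copy the input, then delete unknown keys on every request.
function normaliseDynamic(schema: FlatSchema, input: Plain): Plain {
  const copy: Plain = { ...input };
  for (const key of Object.keys(copy)) {
    if (!Object.prototype.hasOwnProperty.call(schema, key)) delete copy[key];
  }
  return copy;
}

// Compiled approach: walk the schema once and emit a specialised function
// that builds a fresh object containing only the known fields.
function compileNormaliser(schema: FlatSchema): (input: Plain) => Plain {
  const fields = Object.keys(schema)
    .map((key) => `${JSON.stringify(key)}: v[${JSON.stringify(key)}]`)
    .join(", ");
  // For { id, name } the generated body is:
  //   return { "id": v["id"], "name": v["name"] }
  return new Function("v", `return { ${fields} }`) as (input: Plain) => Plain;
}

const userSchema: FlatSchema = { id: "number", name: "string" };
const normaliseUser = compileNormaliser(userSchema);

const payload = { id: 1, name: "saltyaom", isAdmin: true };
console.log(JSON.stringify(normaliseDynamic(userSchema, payload))); // {"id":1,"name":"saltyaom"}
console.log(JSON.stringify(normaliseUser(payload))); // {"id":1,"name":"saltyaom"}
```

Both functions drop the unknown `isAdmin` field, but the compiled one does no key iteration or deletion at request time, which is the kind of work the JIT approach is meant to avoid.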

General JIT optimisations

Between Elysia 1.1 (the version used upstream) and 1.4 (current), we made several JIT optimisations to improve overall performance, especially in Sucrose, our JIT compiler for Elysia. These optimisations include:

- Constant folding, inlining lifecycle events

- Reducing validation and coercion overhead

- Minimising the overhead of middleware and plugins

- Improving the efficiency of internal data structures

- Reducing memory allocations during request processing

- Various other micro‑optimisations
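As an illustration of what "inlining lifecycle events" can mean, the sketch below decides once, at registration time, which hooks exist and generates a handler that contains only those calls. It is a simplified picture under our own assumptions, not Sucrose's real code; the `Context`, `Hooks`, `handleGeneric`, and `compileHandler` names are hypothetical.

```typescript
// Hypothetical shapes for this sketch; not Elysia's real types.
type Context = { path: string; log: string[] };
type Hook = (ctx: Context) => void;
type Hooks = { beforeHandle?: Hook; afterHandle?: Hook };
type Handler = (ctx: Context) => string;

// Generic dispatcher: checks for every possible hook on every request.
function handleGeneric(hooks: Hooks, handler: Handler, ctx: Context): string {
  if (hooks.beforeHandle) hooks.beforeHandle(ctx);
  const result = handler(ctx);
  if (hooks.afterHandle) hooks.afterHandle(ctx);
  return result;
}

// Compiled dispatcher: emit a function body that contains only the hook
// calls that were actually registered, so no checks remain at request time.
function compileHandler(hooks: Hooks, handler: Handler) {
  let body = "";
  if (hooks.beforeHandle) body += "hooks.beforeHandle(ctx);\n";
  body += "const result = handler(ctx);\n";
  if (hooks.afterHandle) body += "hooks.afterHandle(ctx);\n";
  body += "return result;";

  const factory = new Function(
    "hooks",
    "handler",
    `return function composed(ctx) {\n${body}\n}`,
  ) as (hooks: Hooks, handler: Handler) => Handler;

  return { run: factory(hooks, handler), source: body };
}

const hooks: Hooks = {
  beforeHandle: (ctx) => {
    ctx.log.push("before");
  },
};
const hello: Handler = (ctx) => `hello ${ctx.path}`;
const compiled = compileHandler(hooks, hello);

const ctx: Context = { path: "/", log: [] };
console.log(compiled.run(ctx)); // hello /
console.log(handleGeneric(hooks, hello, { path: "/", log: [] })); // hello /
console.log(compiled.source.includes("afterHandle")); // false
```

Because no `afterHandle` hook was registered, the generated source never mentions it, whereas the generic dispatcher still pays for the check on each call.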

Since Bun 1.2.3, Bun also offers built-in routing implemented in native code for better performance.

In Elysia 1.3, we leverage Bun’s native routing when possible to improve routing performance.

Bun compile

In the original benchmark, Elysia was not compiled for production.

Using `bun compile` optimises the Elysia application for production, resulting in significant performance improvements and reduced memory usage.

Conclusion

Through constant improvement, Elysia has optimised its performance significantly over the past 1.5 years, resulting in a framework that is now 2× faster than Encore in the same benchmark.

Benchmarks are not the only factor to consider when choosing a framework. Developer experience, ecosystem, and community support are also important.

While benchmark results are hard to quantify, we have detailed our steps for running the benchmark, and we recommend that you run it on your own machine to get the most accurate results for your use case.

Run the benchmark on GitHub

Open source code of this article